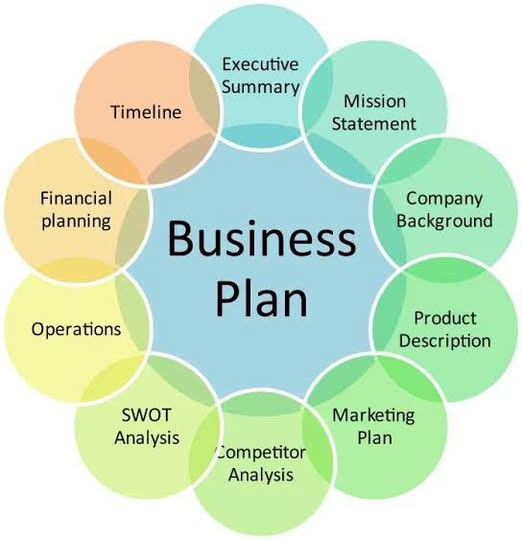

Executive Summary

The life sciences tools market, valued at over $100 billion annually, presents significant opportunities for companies that can effectively identify and engage with researchers at the right moment. However, sales teams currently face critical challenges: fragmented data sources, outdated information, and inefficient lead qualification processes that result in wasted effort on cold leads.

DreamozTech proposes building an AI-powered sales intelligence platform that transforms scattered research activity into clear, actionable insights. By combining large-scale data integration with artificial intelligence, the platform will provide a real-time view of emerging opportunities, moving beyond static databases to deliver intelligent sales enablement at scale.

The Challenge

Today's sales teams in the life sciences sector struggle with:

- Fragmented Data Sources: Relevant information is scattered across public repositories, institutional websites, patent databases, and social media platforms

- Outdated Information: Static databases fail to capture real-time changes in research activity, funding, and emerging opportunities

- Inefficient Lead Qualification: Sales representatives waste valuable time pursuing cold leads without clear signals of opportunity

- Lack of Contextual Intelligence: Without understanding a researcher's current focus and needs, outreach lacks relevance and impact

Our Solution

1. Unified Data Pipeline Architecture

Data Aggregation and Integration

We will build a robust, multi-source data pipeline that ingests information from:

- Public Repositories: PubMed for publications, ClinicalTrials.gov for clinical studies

- Institutional Sources: University and research center websites

- Patent Databases: USPTO, EPO, and other patent offices

- Professional Networks: LinkedIn, ResearchGate, and relevant social media platforms

- Funding Sources: NIH, NSF, and private foundation grant databases

- Conference Data: Major scientific conference attendance and presentations

Our pipeline will use APIs, web scraping, and data connectors to continuously pull information into a centralized data lake, providing broad coverage of the research landscape.
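As an illustration of API-based ingestion, the sketch below builds a query against NCBI's public E-utilities ESearch endpoint for PubMed and parses the PubMed IDs (PMIDs) out of the JSON response. It is a minimal sketch, not the planned implementation: the function names `build_esearch_url` and `parse_esearch_ids` are hypothetical, and restricting each pull to the last few days by Entrez date (`reldate` with `datetype=edat`) is an assumption about how incremental ingestion might be scheduled.

```python
import json
from urllib.parse import urlencode

# Public NCBI E-utilities ESearch endpoint.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_esearch_url(term: str, days_back: int = 7, retmax: int = 100) -> str:
    """Build an ESearch query for PubMed records added within the last `days_back` days."""
    params = {
        "db": "pubmed",
        "term": term,
        "retmode": "json",
        "retmax": retmax,
        "reldate": days_back,  # only records whose date (see datetype) is within N days
        "datetype": "edat",    # Entrez date: when the record was added to PubMed
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


def parse_esearch_ids(payload: str) -> list[str]:
    """Extract the list of PMIDs from an ESearch JSON response body."""
    data = json.loads(payload)
    return data["esearchresult"]["idlist"]
```

In a scheduled job the URL would be fetched with `urllib.request.urlopen` (or any HTTP client) and the returned IDs passed to EFetch for full records; NCBI asks clients without an API key to stay under three requests per second.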

Data Cleansing and Standardization

Raw data will be processed through NLP and machine learning models to:

- Extract key entities (researcher names, affiliations, research topics) using named entity recognition (NER)

- Deduplicate records that refer to the same researcher across sources

- Resolve inconsistencies across data sources

- Create a unified "single source of truth" for each researcher profile
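The deduplication step can be illustrated with a simple rule-based matcher. This is a stand-in for the machine learning models described above, not a description of them: it assumes hypothetical records with `name` and `affiliation` fields, groups candidates by surname plus first initial ("blocking"), and then compares normalized affiliations with Python's `difflib.SequenceMatcher`. The 0.8 similarity threshold is an illustrative assumption.

```python
import re
import unicodedata
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def name_key(name: str) -> str:
    """Blocking key: surname plus first initial, handling both 'Last, First' and 'First Last'."""
    if "," in name:
        last, first = [part.strip() for part in name.split(",", 1)]
    else:
        parts = name.split() or [""]
        first, last = " ".join(parts[:-1]), parts[-1]
    return f"{normalize(last)}|{normalize(first)[:1]}"


def same_researcher(a: dict, b: dict, threshold: float = 0.8) -> bool:
    """Match when blocking keys agree and normalized affiliations are similar enough."""
    if name_key(a["name"]) != name_key(b["name"]):
        return False
    ratio = SequenceMatcher(None, normalize(a["affiliation"]), normalize(b["affiliation"])).ratio()
    return ratio >= threshold


def deduplicate(records: list[dict]) -> list[list[dict]]:
    """Greedy clustering: each record joins the first existing cluster it matches."""
    clusters: list[list[dict]] = []
    for rec in records:
        for cluster in clusters:
            if same_researcher(cluster[0], rec):
                cluster.append(rec)
                break
        else:
            clusters.append([rec])
    return clusters
```

Blocking keeps the number of pairwise comparisons manageable; a learned matcher could replace `same_researcher` while keeping the same clustering loop, and each resulting cluster would then be merged into one researcher profile.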

2. AI-Driven Intelligence Layer

Opportunity Scoring and Lead Prioritization

Our AI model will score potential leads based on multiple signals:

- Recent publication activity and citation trends

- Grant funding status and award amounts

- Conference participation and speaking engagements

- Research topic evolution and emerging interests

- Lab expansion indicators (new hires, equipment purchases)

A supervised model trained on historical sales data and past conversions will predict each lead's "temperature" (its likelihood of converting) and prioritize warm opportunities, reducing the time spent on cold outreach.
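One minimal form of such a scoring model is logistic regression, whose output probability can serve directly as the lead temperature. The sketch below trains one with plain batch gradient descent so it has no dependencies; a production system might instead use a library model such as scikit-learn's `LogisticRegression` or a gradient-boosted classifier. The four features and the toy training rows are hypothetical and are not drawn from real sales data.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def standardize(rows):
    """Column means and standard deviations used for z-score scaling."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [
        math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) or 1.0  # avoid divide-by-zero
        for j in range(d)
    ]
    return means, stds


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic-regression weights by batch gradient descent on the log-loss."""
    means, stds = standardize(X)
    Z = [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]
    n, d = len(Z), len(Z[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for row, target in zip(Z, y):
            # Gradient of the log-loss for one example is (prediction - label) * features.
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, row)) + b) - target
            for j in range(d):
                grad_w[j] += err * row[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b, means, stds


def score_lead(features, model):
    """Predicted conversion probability in [0, 1], used as the lead 'temperature'."""
    w, b, means, stds = model
    z = b + sum(wi * (v - m) / s for wi, v, m, s in zip(w, features, means, stds))
    return sigmoid(z)


# Toy, made-up training rows (not real sales history). Hypothetical features:
# [publications_last_12m, has_active_grant, conference_talks_last_12m, new_lab_hires_last_6m]
X = [[5, 1, 3, 2], [4, 1, 2, 1], [6, 1, 1, 3], [1, 0, 0, 0], [0, 0, 1, 0], [2, 0, 0, 0]]
y = [1, 1, 1, 0, 0, 0]  # 1 = lead converted, 0 = did not
model = train_logistic(X, y)
```

A new lead would be scored with, for example, `score_lead([5, 1, 2, 2], model)`, and leads sorted by this probability so representatives see the warmest opportunities first. Standardizing features keeps a single learning rate workable across counts of very different scales.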

Intelligent Recommendation Engine

The platform will feature a sophisticated recommendation system that:

- Uses collaborative filtering and content-based filtering to match researchers with relevant products

- Suggests specific reagents, equipment, or services based on current research activities

- Provides contextual talking points for sales conversations

- Identifies cross-sell and upsell opportunities
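The content-based half of the recommendation engine can be sketched as TF-IDF vectors plus cosine similarity: a researcher's recent abstracts are compared against product descriptions, and the closest products are suggested. The catalog structure (product name mapped to a description string), the small stopword list, and the function names are assumptions for illustration; collaborative filtering, which would draw on purchase histories across customers, is not shown.

```python
import math
import re
from collections import Counter

# Small illustrative stopword list; a real system would use a fuller list or an NLP library.
STOPWORDS = {"the", "of", "and", "for", "in", "a", "to", "with", "on", "using"}


def tokens(text):
    """Lowercase alphanumeric tokens with stopwords removed."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def tfidf_vectors(docs):
    """Sparse TF-IDF vectors (term -> weight) using smoothed IDF."""
    counts = [Counter(tokens(doc)) for doc in docs]
    n = len(docs)
    df = Counter(term for c in counts for term in c)  # number of documents containing each term
    return [
        {t: tf * (math.log((1 + n) / (1 + df[t])) + 1) for t, tf in c.items()}
        for c in counts
    ]


def cosine(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0


def recommend(research_text, catalog, k=3):
    """Rank products (name -> description) by similarity to a researcher's recent text."""
    names = list(catalog)
    vectors = tfidf_vectors([catalog[name] for name in names] + [research_text])
    query, product_vectors = vectors[-1], vectors[:-1]
    scores = {name: cosine(query, vec) for name, vec in zip(names, product_vectors)}
    return sorted(names, key=scores.get, reverse=True)[:k]
```

The smoothed IDF term, `log((1 + n) / (1 + df)) + 1`, keeps terms that appear in every document from receiving zero weight. In a fuller system the sparse vectors could be swapped for transformer embeddings stored in a vector database without changing the ranking logic.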

Real-Time Activity Monitoring

Using stream-processing technologies (Apache Kafka for event ingestion, Spark Streaming for processing), we will monitor:

- New publication releases

- Grant awards and funding announcements

- Conference attendance and presentations

- Lab personnel changes

- Social media activity indicating research direction shifts

This gives the platform a continuously updated view of opportunities as they emerge, enabling proactive rather than reactive sales engagement.
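One common way to turn a stream of these events into alerts is a per-researcher sliding time window: each event adds a weighted signal, events older than the window are evicted, and a researcher is flagged when the windowed total reaches a threshold. The sketch below does this in plain Python. An in-memory sequence of `Event` objects stands in for records consumed from a Kafka topic, and the signal weights, window length, and threshold are hypothetical values, not tuned ones. In Spark Structured Streaming a similar aggregation would typically be expressed with the `window` function over an event-time column.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Event:
    researcher_id: str
    kind: str         # e.g. "publication", "grant_award", "conference_talk", "new_hire"
    timestamp: float  # seconds since epoch


# Hypothetical per-signal weights; in practice these would be tuned or learned.
SIGNAL_WEIGHTS = {"publication": 1.0, "grant_award": 3.0, "conference_talk": 1.5, "new_hire": 2.0}


class SlidingWindowMonitor:
    """Keeps a per-researcher time window of weighted events and flags bursts of activity."""

    def __init__(self, window_seconds: float, threshold: float):
        self.window = window_seconds
        self.threshold = threshold
        self.events = defaultdict(deque)  # researcher_id -> deque of (timestamp, weight)
        self.totals = defaultdict(float)  # researcher_id -> weighted sum inside the window

    def process(self, event: Event) -> bool:
        """Add one event; return True if the researcher's windowed score is at or above threshold."""
        rid = event.researcher_id
        weight = SIGNAL_WEIGHTS.get(event.kind, 0.0)
        self.events[rid].append((event.timestamp, weight))
        self.totals[rid] += weight
        # Evict events that fell out of the window (assumes roughly time-ordered input).
        q = self.events[rid]
        while q and q[0][0] <= event.timestamp - self.window:
            _, old_weight = q.popleft()
            self.totals[rid] -= old_weight
        return self.totals[rid] >= self.threshold
```

With a 30-day window and a threshold of 5.0, for instance, a grant award followed by a new hire in the same month (3.0 + 2.0) would flag the researcher, while the same two events months apart would not.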

3. Scalable Technical Infrastructure

Cloud-Native Architecture

The platform will be built on a scalable cloud infrastructure using AWS, Google Cloud Platform, or Microsoft Azure, incorporating:

- Data Storage: Amazon S3 / Google Cloud Storage as the data lake for raw data

- Compute Resources: Elastic compute (EC2 / Compute Engine) with GPU support for model training

- Managed Databases: PostgreSQL for structured data, MongoDB/DynamoDB for semi-structured data

- Data Warehouse: BigQuery / Snowflake / Redshift for complex analytical queries

- Serverless Functions: AWS Lambda / Cloud Functions for event-driven processing
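For the event-driven processing, a minimal sketch of an AWS Lambda handler triggered by S3 object-created notifications is shown below. It reads the bucket and object key from each record in the standard S3 event structure and derives the data source from the key. The key layout `raw/<source>/<date>/<file>` is a hypothetical data-lake convention, and returning the routed records stands in for whatever downstream step (a queue, a Glue job) the real pipeline would trigger.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Entry point for an S3 'object created' notification; routes new raw files by source."""
    routed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded (e.g. spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        # Hypothetical data-lake layout: raw/<source>/<date>/<file>
        parts = key.split("/")
        source = parts[1] if len(parts) > 2 and parts[0] == "raw" else "unknown"
        routed.append({"bucket": bucket, "key": key, "source": source})
    # A real deployment would hand these off (queue, Glue job, etc.); here they are returned.
    return routed
```

Decoding the key with `unquote_plus` matters because a file named `batch 1.json` arrives in the notification as `batch+1.json`.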

AI/ML Operations (MLOps)

To ensure long-term sustainability and performance, we will implement:

- Continuous integration and continuous deployment (CI/CD) pipelines for machine learning models

- Automated model retraining with new data to maintain accuracy

- Model versioning and A/B testing capabilities

- Performance monitoring and drift detection

- Automated data quality checks
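Drift detection can be illustrated with the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in recent production data. The sketch below uses equal-width bins over the reference sample and a small floor (`eps`) to avoid taking the log of zero; the bin count and floor are assumptions. A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift that may warrant retraining, though thresholds are best set per feature.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a recent production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Because each term is `(a - e) * log(a / e)`, PSI is non-negative and equals zero only when the binned distributions match; a scheduled job could compute it per feature and trigger the automated retraining step when it crosses the chosen threshold.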

Technical Stack

Cloud Infrastructure

- Primary Provider: AWS / Google Cloud / Azure

- ML Platform: SageMaker / Vertex AI / Azure Machine Learning

- Storage: S3 / Cloud Storage / Data Lake Storage

- Data Warehouse: BigQuery / Snowflake / Redshift

Data Pipeline

- Ingestion: Apache NiFi, Fivetran, AWS Glue

- Orchestration: Apache Airflow

- Stream Processing: Apache Kafka, Spark Streaming

AI/ML Frameworks

- Core ML: scikit-learn for traditional ML, TensorFlow/PyTorch for deep learning

- NLP: Hugging Face Transformers, spaCy, NLTK

- Vector Databases: Pinecone, Milvus, or Weaviate for semantic search

- Recommendation Systems: Custom collaborative and content-based filtering

Data Storage

- Data Lake: S3 / Cloud Storage

- Relational DB: PostgreSQL / MySQL

- NoSQL: MongoDB / DynamoDB

- Analytics: BigQuery / Snowflake / Redshift

Application Layer

- Backend: Python (Django/Flask) or Node.js

- Frontend: React, Angular, or Vue.js

- APIs: RESTful and GraphQL endpoints

Implementation Roadmap

Phase 1: Foundation (Months 1-3)

- Cloud infrastructure setup

- Data pipeline development for core sources

- Initial data lake population

- Basic entity extraction and standardization

Phase 2: Intelligence Layer (Months 4-6)

- Lead scoring model development and training

- Recommendation engine implementation

- Real-time monitoring infrastructure

- Initial user interface development

Phase 3: Refinement and Scale (Months 7-9)

- MLOps pipeline implementation

- Advanced feature development

- User testing and feedback integration

- Performance optimization

Phase 4: Launch and Optimization (Months 10-12)

- Full platform deployment

- Sales team training and onboarding

- Continuous model improvement

- Feature expansion based on user feedback

Expected Outcomes

- Increased Sales Efficiency: an estimated 40-60% reduction in time spent on lead qualification

- Higher Conversion Rates: an estimated 25-35% improvement through better targeting

- Enhanced Relevance: Personalized outreach based on real-time research activity

- Competitive Advantage: First-mover advantage in AI-driven sales intelligence

- Scalable Growth: Platform architecture supports expansion to adjacent markets

Why DreamozTech

DreamozTech brings deep expertise in:

- AI/ML platform development and deployment

- Large-scale data pipeline architecture

- NLP and information extraction from scientific literature

- Cloud-native, scalable system design

- MLOps and production ML systems

We are committed to being a true technology partner, not just a vendor, delivering a platform that evolves with your business needs and stays current through continuous innovation.

Next Steps

We welcome the opportunity to discuss this proposal in detail and answer any questions about our technical approach, implementation timeline, or expected outcomes.

Contact Information: DreamozTech